Laboratory for Software Science

Research

Programming languages are how human intentions are translated into machine instructions. One the one hand, we aim to make fundamental advances in how precisely we can formalize and express these intentions to machines (mainly the focus of Ahman & Earnshaw & Nester); on the other hand, it is important to verify that machines reliably perform as expected, verifying their behavior (mainly the focus of Apinis & Vojdani).

Our group is involved in the following national research projects.

Explainable Verification (2025-2029)

Software failures are expensive. Advanced verification techniques, such as abstract interpretation, have been successful in ensuring software reliability in safety-critical domains, such as avionics and space. However, outside such industries, adoption of sound verification methods is hindered by complexity and usability challenges. This project aims to address these issues by increasing trust in automated verification tools while making them more accessible to developers.

- Mathematical Foundations. We need clear mathematical foundations for the analysis of concurrent systems. Traditional methods often lack compositional reasoning, which makes proofs of correctness cumbersome and unclear. Inspired by methods from category theory, we will develop modular and compositional proofs, focusing on thread-modular analyses and the foundations of side-effecting constraint systems. Additionally, we aim to study the foundations of interactive and incremental analysis techniques, which are relevant to our usability efforts.

- Automated Software Verification Algorithms. The engineering of static program analysis tools is inherently complex. We focus on advancing both the theory and practice of automated software verification. This includes developing verified solvers for program analysis, improving the precision and scalability of thread-modular abstractions, and fostering cooperation between different verification tools. A key goal is to ensure that verifiers can exchange information and validate each other’s results through machine-checkable proof objects known as witnesses.

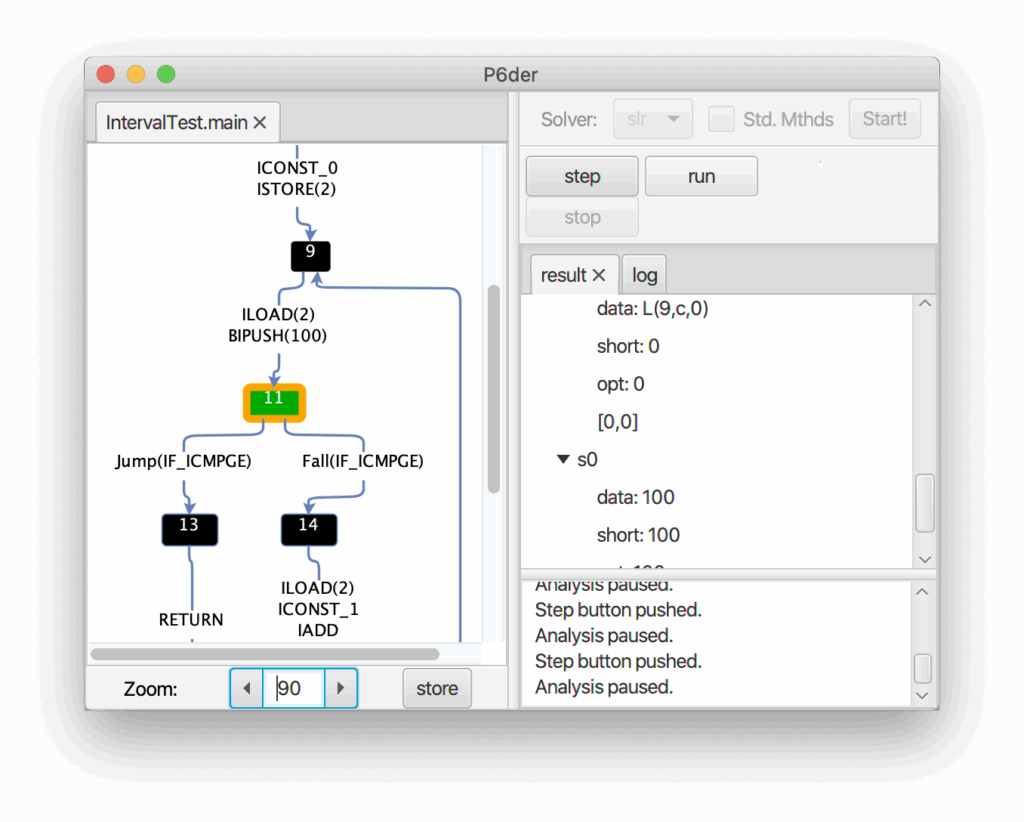

- Explainable Verification in Practice. The practical adoption of sound static analysis tools is often hindered by poor explainability. Here we aim to improve the user-friendliness of verification tools by focusing on making abstract interpretation results more understandable to developers. We will also explore ways to improve the design of user interfaces for verification tools and refine benchmarking processes to better evaluate tool performance and usability in real-world scenarios.

Foundations of Secure Digital Solutions and Artificial Intelligence (2024-2028)

The complexity of digital systems and the widespread use of artificial intelligence in all areas of life increase the need to ensure their correct functioning and security. Serious threats arise from software vulnerabilities, programming errors, unreliability of artificial intelligence, and malicious attacks, which can lead to privacy violations and the spread of misinformation.

The project has brought together research groups in data science (Kull & Vicente), cryptography (Lipmaa), and programming languages (Vojdani) to develop new methodologies for enhancing the reliability of digital solutions. The goal is to create a foundation for evidence-based approaches that ensure the correctness and security of digital systems. These methodologies include abstract semantics, automatic verification of software, verification of delegated computations, zero-knowledge proofs, and enhancing the reliability of artificial intelligence.

Open-Source Projects

By achieving these goals, we hope to promote the mass adoption of sound static analysis. Thus, the most important deliverables of our work are the following publicly available open-source static analysis tools:

- Goblint, a sound static analyzer for multithreaded C that we develop with the Technical University of Munich.

- Põder, static analyzer for Java bytecode, developed by Kalmer Apinis, with an emphasis on explainability.

- Temporal Millet